Status 410 for better crawl budget and removing content from the Google index

We have all come across an "Error 404 page". You know the situation: you click on one of a website's internal links and suddenly land on a page with the message: There was an error, the requested page was not found, try again or return to the home page.

404s are OK. A completely normal result of managing content on the Internet. Correcting these pages (and links) is part of the general hygiene of the website. Once a month, check if the error has occurred somewhere on the site and simply solve the problem.

404 pages and SEO

So where is the problem when we talk about SEO and 404 pages? Status 404 only says that the requested content was not found; it does not say whether the content is gone for good. Google therefore treats the error as possibly temporary and comes back to check. It regularly revisits pages, even those that have already answered: The requested page was not found, I am a 404 page.

This is bad for your crawl budget (the number of URLs Google analyzes when visiting your site). If you have a new site, the number of 404s is low. But what if you bought an old domain that had a lot of content and did not set up redirects after the purchase? Google will probably continue to visit a large number of old URLs that you are not even aware of. Another example: you registered the domain yourself, but you have redesigned the site a couple of times since then. If you did not redirect the old links, Google will keep trying to crawl a bunch of them.

In the end, Google uses crawl budget for irrelevant things instead of going through what matters to you, through the fresh pages of your site.

Another problem with "Error 404 pages" is incoming links. It is not desirable for inbound links to point to a 404. In that case the link value is completely lost, and we know that links are not easy to get.

Therefore, 404s are affecting your SEO efforts.

How often does GoogleBot visit 404 pages?

A simple and free way to check how often Google visits 404 pages is to analyze the server log file. Almost all hosting plans let you download a log file, usually for the previous month. You can analyze the log file with many tools (free or paid), but I recommend good old Excel.

Drag the file into Excel, split the content into columns (the Text to Columns option) and finally label the columns. You will usually have the URL that was requested, the date and time of the visit, who made the request (the bot or user agent) and the server response (200, 301, 302, 404, 500 ...).

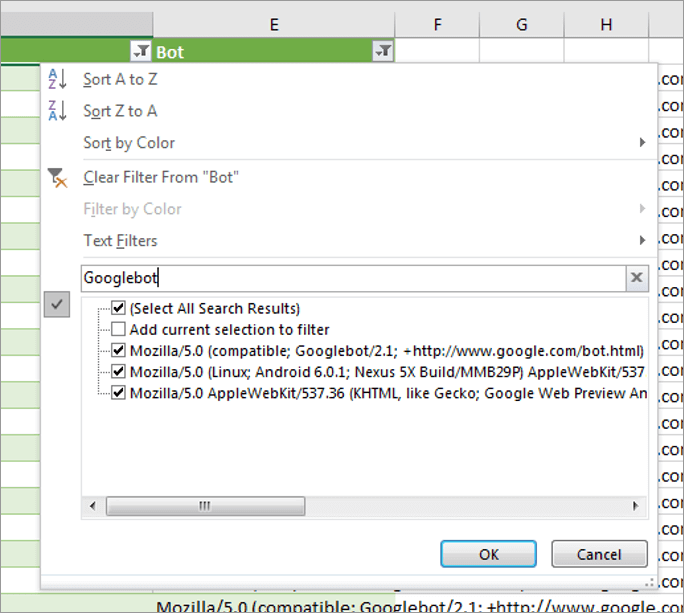

We simply filter for GoogleBot in the log file

Then we filter the bot column by inputting GoogleBot only. Then, in the server response column, I select 404. This gives me all the URLs visited by GoogleBot that returned the response 404.

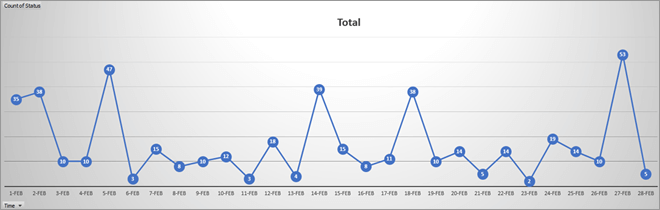
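If you prefer a script to a spreadsheet, the same filter can be sketched in Python. This is a minimal sketch, not the method used for the screenshots: it assumes the log is in the common Apache/Nginx "combined" format, and the names `LOG_PATTERN`, `googlebot_404s` and the sample lines are made up for illustration.

```python
import re

# Assumed "combined" log format: ip, identity, user, [time], "request",
# status, size, "referer", "user agent". Adjust if your server logs differ.
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<url>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def googlebot_404s(lines):
    """Return (timestamp, url) pairs for Googlebot requests answered with 404."""
    hits = []
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that do not fit the assumed format
        # The user agent string can be spoofed; this only checks what it claims.
        if match["status"] == "404" and "googlebot" in match["agent"].lower():
            hits.append((match["time"], match["url"]))
    return hits


# Illustrative log lines, not real data.
SAMPLE = [
    '66.249.66.1 - - [12/Mar/2019:06:25:24 +0000] "GET /old-page.html HTTP/1.1" '
    '404 512 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '66.249.66.1 - - [12/Mar/2019:06:26:02 +0000] "GET /index.html HTTP/1.1" '
    '200 1024 "-" "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
    '203.0.113.7 - - [12/Mar/2019:06:27:10 +0000] "GET /missing.html HTTP/1.1" '
    '404 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

if __name__ == "__main__":
    for when, url in googlebot_404s(SAMPLE):
        print(when, url)
```

To run it on a real log, pass an open file instead of `SAMPLE`, for example `googlebot_404s(open("access.log"))`, where the file name is whatever your host provides.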

A little Excel magic (a pivot table and a chart) and we get the following.

Chart of GoogleBot's visits to the 404 pages

As you can see, Google's visits to pages that return 404 are not negligible. Sometimes Google crawls over forty such URLs per day. And as you can see, Google does not stop, but returns almost every day.
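The pivot step, counting 404 hits per day, can also be sketched in a few lines of Python. This assumes the timestamps are in the Apache style `12/Mar/2019:06:25:24 +0000`; the function name `hits_per_day` and the sample values are illustrative only, not figures from the chart.

```python
from collections import Counter
from datetime import datetime


def hits_per_day(timestamps):
    """Count log entries per calendar day, given Apache-style timestamps."""
    counts = Counter()
    for stamp in timestamps:
        # Assumed format: day/abbreviated month/year:hour:minute:second offset
        day = datetime.strptime(stamp, "%d/%b/%Y:%H:%M:%S %z").date()
        counts[day.isoformat()] += 1
    # Sort by date so the result can be plotted directly.
    return dict(sorted(counts.items()))


# Illustrative timestamps, not real data.
SAMPLE_STAMPS = [
    "13/Mar/2019:07:45:00 +0000",
    "12/Mar/2019:06:25:24 +0000",
    "12/Mar/2019:18:02:11 +0000",
]

if __name__ == "__main__":
    for day, count in hits_per_day(SAMPLE_STAMPS).items():
        print(day, count)
```

Each key in the result is one day and each value is the number of 404 responses served to the bot on that day, which is the same table the Excel pivot produces.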

Status 410 - Gone

If you checked out these old URLs and you saw that there are no inbound links to them, if you realized that there is no need to replicate the content those pages once had, then it is time for you to tell Google to stop accessing those pages.

Status 410 enters the stage. "Status 410 Gone" states: the content is not available and will never be available again. When Google finds a URL that returns the status code 410, it takes this as a clear signal that the page is gone for good: the content is dropped from the index and the URL is revisited far less often, if at all.

Each technology or CMS has its own method of applying the status 410, but below you can see how to do it easily on an Apache server with a rule in the .htaccess file.

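    RewriteRule ^page\.html$ - [G,NC]

or

    Redirect 410 /folder/page.html

The first rule relies on mod_rewrite and needs RewriteEngine On earlier in the file; the second uses mod_alias. Either way, it is a simple and elegant solution that regulates the crawl budget and de-indexes content that we do not want on Google.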
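When the log analysis leaves you with a long list of dead URLs, writing the rules by hand gets tedious. A minimal Python sketch that turns a list of paths into mod_alias rules could look like the following. The function name `redirect_gone_rules` and the sample paths are hypothetical, and the script deliberately skips anything it cannot express safely as a plain path.

```python
def redirect_gone_rules(paths):
    """Build one mod_alias 'Redirect 410' line per unique, simple URL path."""
    rules = []
    seen = set()
    for path in paths:
        path = path.strip()
        # Keep only site-relative paths. Paths with whitespace, quotes or a
        # query string need manual handling, so they are skipped here.
        if not path.startswith("/"):
            continue
        if any(ch.isspace() or ch in '"?' for ch in path):
            continue
        if path in seen:
            continue  # one rule per path is enough
        seen.add(path)
        # Note: Redirect matches by path prefix, so a rule for /folder
        # also covers /folder/page.html.
        rules.append(f"Redirect 410 {path}")
    return rules


# Illustrative paths, not real data.
SAMPLE_PATHS = [
    "/old-page.html",
    "/old-page.html",
    "no-leading-slash",
    "/with space.html",
    "/search?q=old",
    "/folder/page.html",
]

if __name__ == "__main__":
    print("\n".join(redirect_gone_rules(SAMPLE_PATHS)))
```

Review the generated lines before pasting them into .htaccess, and check first that none of the URLs still has inbound links worth redirecting instead.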